I’ve blogged a lot about my admiration for Amazon’s web services stack. I think they understand the web as well as any company in the world. It’s always been my intention to investigate Amazon’s Elastic Compute Cloud (EC2), and since I needed hosting for my new Clockwork Web Framework, I decided to give it a try.

The reason I went with Amazon rather than a traditional hoster is that I have no idea what kind of interest there will be in the framework, and therefore cannot predict what the load on a web server will be. Amazon EC2 is designed for this kind of flexibility, and you pay per hour.

The Platform

I am running a small 32-bit Windows Server 2003 instance to begin with. It only has 1.7 GB of RAM. I can scale this up if I need to, or more likely I will spin up another small instance and load balance the two using Amazon’s Elastic Load Balancing service.

On this, I am using IIS 6, .NET 3.5, SQL Server 2005 Express, and PowerShell. Most of my files are kept on a permanent storage drive (more on this below) and served by IIS. To maximize speed and lower the CPU burden on the server, I have decided to use another Amazon technology, CloudFront.

CloudFront Content Delivery Network

CloudFront is a Content Delivery Network (CDN), like Akamai or Limelight. I use it to serve my images and resource files. Basically Amazon has edge servers all over the world with a copy of my images and resource files, and when users request them from my website, CloudFront automatically sends them a copy from the nearest location to them, making for some very fast download times.

To make this work, you have to use Amazon Simple Storage Service, or S3. This is a virtual file system. Basically you have “buckets” of files that are served up when requests come in from the CloudFront “distributions”.

I’ve optimized it a bit by having two distributions: one for images and one for resources. Browsers limit the number of simultaneous connections per hostname, so a page which requires both things should load faster when the files come from two distribution hostnames in parallel.

You can create CloudFront distributions through code, or through Amazon’s web management portal.

Since you can control the public URL of the distributions, you will notice if you view the properties of my website that my images are handled by the path “http://images.clockworkwf.com” and my resource files are handled by the path “http://resources.clockworkwf.com”. In other words, I have full control over what path I give them. Most people will never know these pictures are being served from Amazon.
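
As a rough illustration of splitting assets across the two hostnames, here is a minimal Python sketch. The two hostnames come from this post; the extension list, the function name `cdn_url`, and the example paths are assumptions made up for the illustration, not how the site is actually built.

```python
from pathlib import PurePosixPath
from urllib.parse import urljoin

# Hostnames taken from the post; everything else here is illustrative.
IMAGE_HOST = "http://images.clockworkwf.com/"
RESOURCE_HOST = "http://resources.clockworkwf.com/"
IMAGE_EXTENSIONS = {".png", ".jpg", ".jpeg", ".gif"}  # assumed list


def cdn_url(asset_path: str) -> str:
    """Map a site-relative asset path to the distribution that serves it."""
    suffix = PurePosixPath(asset_path).suffix.lower()
    host = IMAGE_HOST if suffix in IMAGE_EXTENSIONS else RESOURCE_HOST
    # Strip the leading slash so the path is appended to the host root.
    return urljoin(host, asset_path.lstrip("/"))


print(cdn_url("/img/logo.png"))    # hypothetical image path
print(cdn_url("scripts/site.js"))  # hypothetical resource path
```

Anything with an image extension goes to the images hostname, and everything else falls through to the resources hostname.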

I notice the website loads really quickly, so CloudFront seems to make a big difference.

EC2 Hosting Challenges

So that’s the high-level architecture. There are a number of consequences of using Amazon as a host that I’d like to talk about.

Server Goes Up, Server Goes Down

To begin with, you have to assume that at any moment your server will go down. If your server dies, it vanishes, and you have to “spin up” another one, using the web interface or code. It’s very easy to do from the web console: just click “Launch Instance” and you can pick any server ranging from Ubuntu Linux to 64-bit Windows Server 2003 R2 Enterprise.

Although the server instances you can use have their own hard drive space on C: and D: drives, you have to treat that as transitory storage.

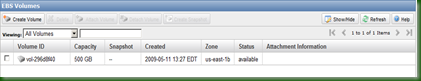

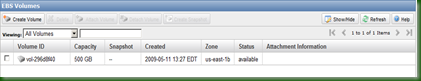

I’ve set up my system in such a way that I can use an Elastic Block Store (EBS) hard drive volume, provided by Amazon. This is a more permanent drive space that you pay for, but it can be attached to any server instance. Think of it as a SAN (that’s probably what it is).

So I’ve got my database and web files on this EBS block, which I then mount to any server instance I’m currently running.

On the server instance, I simply point IIS web server to the EBS block files, and away we go.

The EBS can be any size you like, and you pay per GB per month. Right now I’m using 10GB since my log files and database don’t take up much room. I can add more space later if I need to.

Here’s a screenshot of that EBS volume, in the Amazon web console.

Dynamic DNS Entries

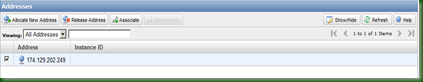

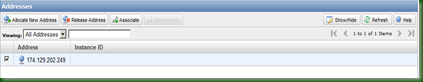

Next problem: Since the server can go down at any moment, DNS is a problem. If my server dies and I spin another one up, it will be given its own IP address, which my DNS entry for www.clockworkwf.com wouldn’t know about. So there might be a long delay while DNS changes to the new IP address.

So, I’m using a Dynamic DNS service called Nettica. They have a management console where I can enter my various domain records and assign a short Time To Live (TTL), which means the DNS entries update frequently. So if my server dies, I can change the entry in Nettica to point to the new server’s IP address, and within a few seconds requests are going back to the right place.
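
To see why the short TTL matters, here is a toy Python simulation of a caching resolver. It is only a sketch: the class names, the 60-second TTL, and the IP addresses (taken from the reserved documentation range) are assumptions for illustration and have nothing to do with Nettica’s actual API.

```python
from dataclasses import dataclass


@dataclass
class CachedRecord:
    ip: str
    expires_at: float  # time on the simulated clock, in seconds


class CachingResolver:
    """Toy resolver that reuses an answer until its TTL runs out."""

    def __init__(self, authoritative: dict, ttl_seconds: float):
        self.authoritative = authoritative  # stands in for the DNS provider
        self.ttl_seconds = ttl_seconds
        self.cache = {}

    def resolve(self, name: str, now: float) -> str:
        cached = self.cache.get(name)
        if cached is not None and now < cached.expires_at:
            return cached.ip  # still within the TTL, so serve the cached IP
        ip = self.authoritative[name]
        self.cache[name] = CachedRecord(ip, now + self.ttl_seconds)
        return ip


records = {"www.clockworkwf.com": "203.0.113.10"}  # assumed old server IP
resolver = CachingResolver(records, ttl_seconds=60)

resolver.resolve("www.clockworkwf.com", now=0)    # answer is cached at t=0
records["www.clockworkwf.com"] = "203.0.113.20"   # server replaced, record updated

stale = resolver.resolve("www.clockworkwf.com", now=30)  # cache still valid
fresh = resolver.resolve("www.clockworkwf.com", now=61)  # cache expired
print(stale, fresh)
```

Until the cached entry expires, visitors are still sent to the dead server, so the TTL is roughly the worst-case delay before traffic reaches the replacement.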

Nettica even allows me to control all of this through C# code. Going forward I plan to write PowerShell server management scripts that can automatically spin up a new server on Amazon, determine the IP, and register that with Nettica.

Incidentally, Amazon EC2 offers what are called “Elastic IP Addresses”. Essentially you can “rent” a fixed IP address which can be dynamically allocated to a server instance. So, in the short run this makes life easier for me, as I have rented one, used that for my Nettica domain name record, and can assign this fixed IP to any new server instance.
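
The recovery routine I have in mind (launch a replacement, reattach the EBS volume, reassign the rented fixed IP) could be sketched roughly as follows. This is Python rather than the PowerShell I plan to use, and it rests on assumptions: the `ec2` argument is expected to behave like a boto3 EC2 client, the function name `replace_server` and the default device name are made up, and the `m1.small` instance type may not be offered everywhere.

```python
def replace_server(ec2, image_id, volume_id, allocation_id, zone,
                   instance_type="m1.small", device="xvdf"):
    """Launch a replacement instance, then reattach the data volume and fixed IP.

    `ec2` is assumed to expose the boto3 EC2 client methods used below.
    """
    reservation = ec2.run_instances(
        ImageId=image_id,
        InstanceType=instance_type,
        MinCount=1,
        MaxCount=1,
        # An EBS volume can only attach to an instance in its own zone.
        Placement={"AvailabilityZone": zone},
    )
    instance_id = reservation["Instances"][0]["InstanceId"]

    # Wait until the instance is running before attaching anything to it.
    ec2.get_waiter("instance_running").wait(InstanceIds=[instance_id])

    # Reattach the volume holding the database and web files.
    ec2.attach_volume(VolumeId=volume_id, InstanceId=instance_id, Device=device)

    # Point the fixed IP at the new instance so the DNS record stays valid.
    ec2.associate_address(InstanceId=instance_id, AllocationId=allocation_id)
    return instance_id
```

With boto3 installed and credentials configured, the client would come from `boto3.client("ec2")`. Passing the client in as an argument keeps the routine easy to dry-run against a stub before pointing it at a real account.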

Next problem: Disaster Recovery.

Disaster Recovery is even more important in the Amazon EC2 world than elsewhere, since again your instances could die at any moment. Not that they will, but the point is, they are “virtual” and Amazon isn’t making any promises (unless you buy a Service Level Agreement from them).

However, Amazon’s EC2 provides a level of DR by its very nature – you can spin up another machine in a small amount of time. Estimates for new Windows instances are about 20 minutes.

There’s also something called an Availability Zone. Essentially it means “Data Centre”. Amazon groups these into Regions such as US East, US West, and Europe, so you can spread your servers around between them. So when that Dinosaur-killing comet hits North America, the Europe Region keeps chugging.

Right now I’m not really doing much with my database, so DR isn’t such an issue. I have some security since my files are on an EBS block. However, eventually I’ll set up a second server in another availability zone and load balance the two.

Another Challenge: Price

Amazon Web Services are flexible, and you are charged per hour, for only what you use. This is an amazing model but it doesn’t work so well for website hosting, because of course your servers are supposed to be online 24/7, 365 days a year.

It’s hard to tell for sure what the annual bill will be, but for my small server instance (remember, only 1.7 GB of RAM) it will cost well over $1,000. That’s a lot more than shared space on a regular hosting provider. However, I’m willing to pay this for the flexibility I get, and also because I think Amazon web services are a strategic advantage, so the earlier I learn about them, the more business opportunities I might unlock.
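
The arithmetic behind that estimate is simple enough to sketch in a few lines of Python. The hourly rate below is an assumed placeholder chosen for illustration, not a quoted Amazon price, and the total leaves out bandwidth, EBS storage, and CloudFront charges.

```python
HOURS_PER_YEAR = 24 * 365  # an always-on server runs 8,760 hours a year


def annual_cost(hourly_rate_usd: float, instances: int = 1) -> float:
    """Yearly compute cost for instances that never shut down."""
    return hourly_rate_usd * HOURS_PER_YEAR * instances


ASSUMED_HOURLY_RATE = 0.125  # placeholder rate in USD, not an official price

print(f"${annual_cost(ASSUMED_HOURLY_RATE):,.2f}")  # prints $1,095.00
```

Put another way, any rate above roughly 11.4 cents an hour (1,000 / 8,760) pushes a single always-on instance past $1,000 a year.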

One good thing is that Amazon has been aggressively dropping its prices as it improves its services. Additionally, they have started offering “Reserved Instances”, basically a pre-pay option for 1-year and 3-year terms. Unfortunately these are only for Linux servers at the moment, but hopefully they will add them for Windows and then I can save money year on year.

CloudWatch Monitoring

Amazon offers a web-based monitoring option for its server instances, called CloudWatch. I’ve started using it (for an additional fee) but I’m not sold on its utility yet. I don’t think I’m using it to its full potential. It is supposed to help you manage server issues by monitoring thresholds.
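
To make “monitoring thresholds” concrete, here is a small Python sketch of the general idea: raise an alarm only when a metric stays above a limit for several consecutive samples. This is a generic illustration and not Amazon’s implementation; the function name, the 80% limit, and the sample values are all assumptions.

```python
def breaches_threshold(samples, threshold, periods):
    """Return True if the last `periods` samples all exceed `threshold`."""
    if periods < 1:
        raise ValueError("periods must be at least 1")
    if len(samples) < periods:
        return False  # not enough data yet to decide
    return all(value > threshold for value in samples[-periods:])


cpu_percent = [35, 60, 82, 91, 88]  # made-up CPU readings, oldest first

print(breaches_threshold(cpu_percent, threshold=80, periods=3))
print(breaches_threshold(cpu_percent, threshold=80, periods=4))
```

Requiring several consecutive breaches keeps a single brief spike from triggering an alert.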

Managing S3 Files Using Cloudberry Explorer

I needed an easy way to create and manage my buckets, CloudFront distributions, and S3 files. I found Cloudberry Explorer, and downloaded the free version of it. I was able to drag and drop 1600 files from my Software Development Kit to the S3 bucket where I’m serving the resources. Super!

There’s a pro version I might purchase which would allow me to set gzip compression and other properties on the files. This would help lower my bandwidth costs and speed up the transfer a bit.
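
For a sense of what gzip saves on text files, here is a minimal Python sketch using the standard `gzip` module. The sample content and the header values are assumptions for illustration. One approach is to upload the pre-compressed bytes along with a `Content-Encoding: gzip` header so that browsers know to decompress them.

```python
import gzip


def gzip_bytes(data: bytes) -> bytes:
    """Compress a payload at the highest gzip level."""
    return gzip.compress(data, compresslevel=9)


# Made-up, repetitive script content standing in for a real resource file.
sample = b"function hello() { return 'Clockwork'; }\n" * 200
compressed = gzip_bytes(sample)

print(len(sample), "bytes before,", len(compressed), "bytes after")

# Metadata that would accompany the compressed object (illustrative values).
upload_headers = {
    "Content-Encoding": "gzip",
    "Content-Type": "application/javascript",
}
```

Text formats such as scripts and stylesheets compress well, while images like PNG and JPEG are already compressed and gain very little.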

Here’s a screenshot of Cloudberry in action:

I love how easy it is to set up and use Amazon’s web services stack. I think they have a great business model for the Cloud, and they’re the company to beat. I’m willing to rely on them for the launch of Clockwork Web Framework and so far I haven’t been disappointed.